DATA_FAIR, a Data Engineering and Data Science Conference

I spent an incredible day at the DATA_FAIR, a conference dedicated to fostering an inclusive environment for knowledge exchange, networking and upskilling in data engineering and data science.

It was a day packed with learning from my peers, meeting like-minded people and exchanging experiences. The talks focused on practical applications of data engineering technologies and on current and emerging trends in ML and AI. They were followed by a round-table discussion about ethical data engineering.

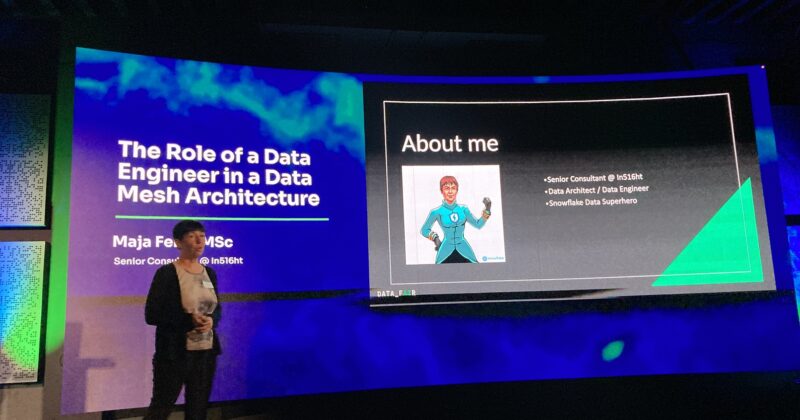

My contribution to the conference was a talk titled "The Role of a Data Engineer in a Data Mesh Architecture". I explained the traditional data warehousing architecture and its challenges, which include long delivery times, low flexibility, and dependence on the IT department for implementation. Because the ability to use data for decision-making is critical to a company's success, companies should give their employees easy access to the data they need.

According to Zhamak Dehghani, who introduced the Data Mesh concept, we must start thinking outside...